My First Machine Learning Experiment

5 Jun 2018 12:22 PM IST

Last Updated: Wed, 06 Jun 2018 03:10 PM IST

Yesterday evening I wrote a small program in less than an hour, and the results amazed me. Not even the compiler I've been working on for months has given me output this good, it seems. To make things historical, this is the first machine learning experiment I've ever done.

The program, dubbed pypredictdomain, aims to learn the vocabulary of different domains (subjects), and tries to predict the domain of a new article. For example, if you teach it with a set of physics-related articles labeled physics and music-related articles labeled music, it will be able to predict the domain of a new physics-related article to be physics, without your help.

Such a program can play an important role in other projects, such as search engine development. I couldn't find a similar one in a quick search, but I'm sure there ought to be many.

Despite being important and having good results, the program is silly. It consists of two Python files measuring less than a hundred lines in total. To make things even simpler, there are no neural networks or any such fancy stuff. The only high-level libraries in use are BeautifulSoup and urllib2 (the os module is also a high-level wrapper for low-level OS features, but it is basic from a Python viewpoint).

I could've written it years ago. Then why did it take so long? Because I didn't know what machine learning was, what actual AI code looked like, and how the beautiful idea of neural networks maps to a sequence of lines in some programming language. I'm still unsure of any of them (seriously) but I couldn't wait anymore.

Whenever I try to read about machine learning (online or offline), what I come across is a bunch of articles that provide instructions to work with some ready-made library. That's not the way I like it. I want to build from scratch. That doesn't imply you've to write your own compiler and OS. It may take a lifetime just to write a Hello World program that way. What I mean by `from scratch' here is the avoidance of libraries exclusively built for machine learning. You can use everything else.

I'm also aware that building from scratch is a bad practice when it comes to actual production. It takes a lot of time, introduces a thousand vulnerabilities, and wastes human resource. So there is no point in building from scratch unless you've got a strong reason. But while learning, there inherently is a reason: building from scratch is the only way you can understand how something works (stress on understand; not be aware of).

Before writing something, I usually don't search for code examples if I can avoid it. I rely on upstream documentation, and pay more attention to the security and performance warnings it gives. Sometimes I look at others' code after writing my version successfully, just out of curiosity and with the intention of improving my code (but I never copy-paste). I also visit forums like StackOverflow where I can learn common pitfalls and workarounds.

But sometimes I'm forced to search for sample programs because I've absolutely no idea about that domain. Writing a first-stage bootloader was one like that. Building a Qt app is still another. But unlike all these, a quick search for machine learning from scratch yields no useful result. Maybe that's because the field is so complex that you can develop nothing from scratch? I didn't like that idea. That's why I decided to perceive machine learning in its very basic sense and tried to write a program myself.

This program is perhaps the simplest you can have. You'll realize that once you see the code and learn how it works.

It is written as two files, learn.py and predict.py. The first script builds the domain-wise vocabulary, while the second one compares a given article with the learnt vocabulary in order to predict its domain.

Before going further, let me warn you: machine learning has progressed far and this experiment only considers its superficial definition. Speaking geeky, this is a machine learning Hello World program.

The Learning Process

Let's get into the details. Before running learn.py, you've to create a subdirectory named learn-urls in the same directory where learn.py resides, and keep the list of URLs related to different domains under files named with the domains, one URL per line. For example, learn-urls/physics (no extension) lists the URLs that point to physics-related articles. learn-urls/music lists URLs of music-related articles. Note that these URLs should be readily readable HTML documents (probably XML and plaintext too), not some fancy AJAX page or a PDF file.
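The layout can also be created by a few lines of code. This is only a sketch, written for Python 3 (unlike the Python 2 scripts in this post); the helper name write_url_lists and the scratch directory are my assumptions for illustration, and the URLs are taken from the sample set used later.

```python
import os
import tempfile

def write_url_lists(base_dir, url_lists):
    """Create base_dir/learn-urls/<domain> files, one URL per line."""
    target = os.path.join(base_dir, "learn-urls")
    os.makedirs(target, exist_ok=True)
    for domain, urls in url_lists.items():
        # The file name is the domain itself, with no extension
        with open(os.path.join(target, domain), "w") as fp:
            fp.write("\n".join(urls) + "\n")
    return target

url_lists = {
    "physics": [
        "https://en.wikipedia.org/wiki/Velocity",
        "https://en.wikipedia.org/wiki/Acceleration",
    ],
    "music": [
        "https://en.wikipedia.org/wiki/Piano",
        "https://en.wikipedia.org/wiki/Cello",
    ],
}

# A scratch directory is used here so that nothing real gets overwritten
base_dir = tempfile.mkdtemp()
target = write_url_lists(base_dir, url_lists)
print(sorted(os.listdir(target)))
```

To use it for real, pass the directory that holds learn.py instead of the scratch directory.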

Now you can run learn.py. This is what it does:

- For each domain in domains:

  - For each url in domain:

    - Fetch the page using urllib2

    - Parse the page using BeautifulSoup

    - Extract the text, split it into words and store them in an array dedicated to that domain

  - Store the array in a file named with the domain under the directory learnt-vocabulary

After this process, learnt-vocabulary/ contains a set of files like physics, music, etc., each containing probably a vast amount of words related to the corresponding domain, stored word-per-line.
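The per-page part of the steps above (extract the text, split it into words, keep each word once) can be tried without touching the network. The following is a rough sketch for Python 3 that uses the standard html.parser module as a stand-in for BeautifulSoup; the names TextExtractor and unique_words are made up for this illustration, and skipping script and style content is my own assumption about what counts as article text.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects text chunks, ignoring the bodies of <script> and <style>."""
    SKIPPED = ("script", "style")

    def __init__(self):
        super().__init__()
        self.chunks = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.chunks.append(data)

def unique_words(html):
    """Return the words of a page in first-seen order, each one only once."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    seen = set()
    words = []
    # split() without arguments splits on any run of whitespace
    for w in " ".join(parser.chunks).split():
        if w not in seen:
            seen.add(w)
            words.append(w)
    return words

sample = ("<html><head><script>var x = 1;</script></head>"
          "<body><h1>Velocity</h1><p>Velocity is a vector quantity.</p></body></html>")
print(unique_words(sample))  # ['Velocity', 'is', 'a', 'vector', 'quantity.']
```

Note that punctuation stays attached to the words ('quantity.'), just as it would with a plain split in the real scripts.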

Now it's time to check what the program has learned.

Domain Recognition

Just run predict.py and answer the prompt that asks for a URL. Once the page is loaded, the script performs the analysis and tells you which domain it thinks the page belongs to.

The internals are like this:

- Read the files under learnt-vocabulary and build the domain-wise vocabulary

- Remove words common in all domains (ideas: intersection and difference)

- Read url

- Fetch the page, extract the text, and split into words

- Prepare a table showing how many of these words appear in each domain's vocabulary

- Display the domain(s) with the highest number of matches as the prediction
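The intersection-and-difference idea and the match counting in these steps can be seen on toy data. This is a minimal sketch for Python 3, not the script itself; the function names remove_common and predict and the tiny vocabularies are invented for the illustration.

```python
def remove_common(vocabulary):
    """Drop the words that appear in every domain's vocabulary."""
    sets = [set(words) for words in vocabulary.values()]
    # Intersection of all the sets gives the words common to all domains
    common = set.intersection(*sets) if sets else set()
    # Difference leaves only the words that tell the domains apart
    return {domain: set(words) - common for domain, words in vocabulary.items()}

def predict(vocabulary, words):
    """Return the match count per domain and the domain(s) with the highest count."""
    distinctive = remove_common(vocabulary)
    matches = {
        domain: sum(1 for w in words if w in vocab)
        for domain, vocab in distinctive.items()
    }
    highest = max(matches.values())
    best = [domain for domain, count in matches.items() if count == highest]
    return matches, best

vocabulary = {
    "music": ["the", "Edit", "piano", "sonata", "cello"],
    "physics": ["the", "Edit", "velocity", "energy", "force"],
}
article = "the velocity of the piano lid and the energy it carries".split()

matches, best = predict(vocabulary, article)
print(matches)  # {'music': 1, 'physics': 2}
print(best)     # ['physics']
```

Here 'the' and 'Edit' belong to both vocabularies, so they are removed before matching and do not add to either count.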

Trial

After forty minutes of coding, I was thrilled to see the first outcome. So I built a very minimal sample set for the learning part, and ran learn.py. Thanks to the simplicity of Python, one or two bugfixes were all that was needed.

The samples were from English Wikipedia. They contain lots of out-of-the-domain words like Edit and Word History, but such words will be filtered out by predict.py while removing common words, if all domains were taught with articles from Wikipedia.

Okay, here is the sample set:

learn-urls/music:

https://en.wikipedia.org/wiki/Piano
https://en.wikipedia.org/wiki/Cello
https://en.wikipedia.org/wiki/Raga
https://en.wikipedia.org/wiki/John_Williams
https://en.wikipedia.org/wiki/Sonata

learn-urls/physics:

https://en.wikipedia.org/wiki/Velocity
https://en.wikipedia.org/wiki/Impulse_(physics)
https://en.wikipedia.org/wiki/Classical_mechanics
https://en.wikipedia.org/wiki/Acceleration
https://en.wikipedia.org/wiki/Power_(physics)
https://en.wikipedia.org/wiki/Energy_(physics)
https://en.wikipedia.org/wiki/Sun

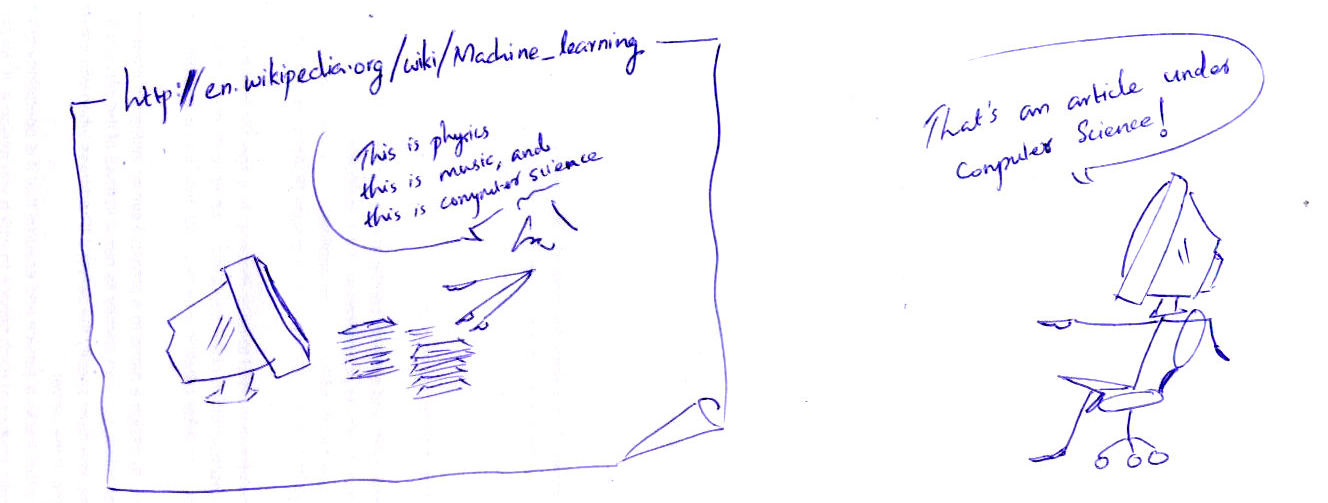

Then I ran predict.py, and it took 5-10 minutes to fix its bugs. Despite having a poor learning sample set, it proved to be capable of doing what it ought to do:

Reading vocabulary of the domain: music
Reading vocabulary of the domain: physics
Words in the domain music before removing the common words: 18331
Words in the domain music after removing the common words: 12344
Words in the domain physics before removing the common words: 22183
Words in the domain physics after removing the common words: 10764
Build the vocabulary.

Enter a URL: https://en.wikipedia.org/wiki/Moon
<SOME UNWANTED OUTPUT FROM BeautifulSoup>
Matches in the domain of music: 10874
Matches in the domain of physics: 12667
The given article might be of the following domain(s):
physics

And this was the output for https://en.wikipedia.org/wiki/Cantata:

Matches in the domain of music: 8378
Matches in the domain of physics: 7120
The given article might be of the following domain(s):
music
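To get a feel for how decisive these two predictions were, the match counts can be turned into shares of the total. This is just arithmetic on the numbers printed above, sketched for Python 3; the helper name match_shares is made up, and the share itself is not something predict.py computes.

```python
def match_shares(matches):
    """Return each domain's fraction of the total match count."""
    total = sum(matches.values())
    if total == 0:
        # No matches at all, so no domain gets a share
        return {domain: 0.0 for domain in matches}
    return {domain: count / total for domain, count in matches.items()}

moon = {"music": 10874, "physics": 12667}
cantata = {"music": 8378, "physics": 7120}

for name, matches in (("Moon", moon), ("Cantata", cantata)):
    shares = match_shares(matches)
    print(name, {domain: round(100 * share, 1) for domain, share in shares.items()})
```

In both runs the winning domain takes roughly 54% of the matches, so the predictions are right but the margins are narrow.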

Later I expanded the samples with more domains like Computer Science and Dinosaur, and found the program to be successful. This morning, it even predicted this post to be under computer-science.

Sure, I haven't given any challenging or confusing URLs to predict.py, but this is enough for a start.

The Code

I paste the code here, just for completeness. It might still be buggy. For one thing, there is no error handler for disk I/O errors. I hope I can come back and update this post with the modified versions.

learn.py

#!/usr/bin/env python
# learn.py
# This code is part of pypredictdomain, a machine learning experiment to
# recognize the domain (subject) of an online article, given as a URL.
# Copyright (C) 2018 Nandakumar Edamana
# License: GNU General Public License version 3
# Written on Mon, 4 Jun 2018
# Last modified: Tue, 05 Jun 2018 09:55:47 +0530

import os, urllib2
from bs4 import BeautifulSoup

vocabulary = {}
domains = os.listdir('learn-urls') # TODO error handling

# The output directory has to exist before the vocabulary files are written
if not os.path.isdir('learnt-vocabulary'):
    os.mkdir('learnt-vocabulary')

for domain in domains:
    print('Learning the domain: ' + domain)
    vocabulary[domain] = [] # Empty list for domain

    for url in open('learn-urls/' + domain).readlines(): # TODO error handling
        url = url.strip() # Cut the newline
        if not url:
            continue # Skip blank lines
        print('URL: ' + url)

        # Fetch and parse the page
        fp = urllib2.urlopen(url) # TODO error handling
        # Naming the parser avoids the "no parser was explicitly specified"
        # warning from BeautifulSoup
        bs = BeautifulSoup(fp.read(), 'html.parser')
        fp.close()

        # Extract words and store to the appropriate array
        pagetext = bs.get_text()
        # split() without an argument splits on any whitespace, so no word
        # carries a newline into the word-per-line vocabulary file
        words = pagetext.split()

        for w in words:
            # Insert if not present already
            if w not in vocabulary[domain]:
                vocabulary[domain].append(w)

    # XXX Removal of common words (across domains) should not be done while
    # learning (i.e., in this script), because adding a new article
    # with previously deleted common words would cause those
    # words to be added to a domain exclusively.

    print('Writing vocabulary to disk...')
    fp = open('learnt-vocabulary/' + domain, 'w')
    for w in vocabulary[domain]:
        fp.write(w.encode('utf-8') + '\n')
    fp.close()
    print('Done.')
    print('===\n')

predict.py

#!/usr/bin/env python
# predict.py
# This code is part of pypredictdomain, a machine learning experiment to
# recognize the domain (subject) of an online article, given as a URL.
# Copyright (C) 2018 Nandakumar Edamana
# License: GNU General Public License version 3
# Written on Mon, 4 Jun 2018
# Last modified: Wed, 06 Jun 2018 15:04:13 +0530
# (moved from sets.Set() to set())

import os, urllib2
from bs4 import BeautifulSoup

vocabulary = {}
domains = os.listdir('learn-urls') # TODO error handling
matches = {} # Count of matching words in each domain

for domain in domains:
    print('Reading vocabulary of the domain: ' + domain)
    vocabulary[domain] = [] # Empty list for domain
    for w in open('learnt-vocabulary/' + domain).readlines(): # TODO error handling
        vocabulary[domain].append(w.rstrip('\n')) # Cut the newline before adding

# Find the common words. intersection() returns a new set instead of changing
# the old one, so start from the first domain and keep the result each time.
common = set(vocabulary[domains[0]]) if domains else set()
for domain in domains[1:]:
    common = common.intersection(vocabulary[domain]) # XXX not union

# Remove common words
for domain in domains:
    print('Words in the domain ' + domain + ' before removing the common words: ' + str(len(vocabulary[domain])))
    # Now take the difference
    vocabulary[domain] = list(set(vocabulary[domain]).difference(common))
    print('Words in the domain ' + domain + ' after removing the common words: ' + str(len(vocabulary[domain])))

print('Build the vocabulary.\n')

url = raw_input('Enter a URL: ')

# Fetch and parse the page
fp = urllib2.urlopen(url) # TODO error handling
bs = BeautifulSoup(fp.read(), 'html.parser')
fp.close()

# Extract the text and split it into words
pagetext = bs.get_text()
words = pagetext.split()

# Perform matching
highmatches = 0
for domain in domains:
    matches[domain] = 0
    for w in words:
        # Count the word if it is in this domain's vocabulary
        if w.encode('utf-8') in vocabulary[domain]:
            matches[domain] += 1
    print('Matches in the domain of ' + domain + ': ' + str(matches[domain]))
    if matches[domain] > highmatches:
        highmatches = matches[domain]

print('The given article might be of the following domain(s): ')
for domain in domains:
    if matches[domain] == highmatches:
        print(domain)
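Both scripts mark their file reads with TODO error handling. One possible shape for that, as a sketch in Python 3 rather than a patch to the scripts above: the helper name read_url_list is hypothetical, and returning an empty list on failure is just one choice among many.

```python
import os
import tempfile

def read_url_list(path):
    """Return the non-empty lines of a URL list, or [] if it cannot be read."""
    try:
        with open(path) as fp:
            return [line.strip() for line in fp if line.strip()]
    except (IOError, OSError) as err:
        # Report the problem and carry on with the other domains
        print("Skipping " + path + ": " + str(err))
        return []

# Try it on a scratch directory with one readable file and one missing file
scratch = tempfile.mkdtemp()
good = os.path.join(scratch, "physics")
with open(good, "w") as fp:
    fp.write("https://en.wikipedia.org/wiki/Velocity\n\n")

print(read_url_list(good))
print(read_url_list(os.path.join(scratch, "missing")))
```

Skipping an unreadable list keeps one bad file from stopping the whole learning run, at the cost of silently learning less.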

Limitations and Future Work

- Articles from untaught domains will confuse the program; it cannot even tell untaught domains apart.

- Compound words are not considered now

- Only the domains that share the highest match count are listed as the prediction; sorting the match counts can be useful for multi-domain articles

- Yet to validate the textual output of BeautifulSoup. (Does it dump JavaScript in between? I think I saw it once.)

- Performance and optimization yet to be considered
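The sorting idea from the list above could look something like this. It is a small Python 3 sketch with a hypothetical helper name, rank_domains, fed with the match counts from the Moon run shown earlier.

```python
def rank_domains(matches):
    """Return (domain, count) pairs, highest match count first."""
    return sorted(matches.items(), key=lambda item: item[1], reverse=True)

matches = {"music": 10874, "physics": 12667}
for domain, count in rank_domains(matches):
    print(domain + ": " + str(count))
```

With a full ranking, an article that sits between two domains would show both near the top instead of only the single winner.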

Nandakumar Edamana

Tags: machine learning, artificial intelligence, python, beautifulsoup, urllib2, experiment, technology

Read more from Nandakumar at nandakumar.org/blog/